Problem?

Supercollider makes it easy to vary parameter values by squiggling the mouse using the UGens MouseX and MouseY. Sometimes I play with these to find that the squiggling itself produces nice results, but then the thought of having to spell out all these x and y values manually makes me sigh. This made me think about how to automate squiggling - not by sending some mouse commands to the window manager, but by using mathematical formulas that describe squiggles.

Approach?

Parametric equations! Enough said. Let's get started building up some intuitions and then applying them to create interesting squiggles. Note: all figures in this article are made with Desmos calculator, a very easy to use online tool to experiment with (amongst other things) parametric equations. Highly recommended for designing your own squiggles.

Note: also see part II of this blog post on a much simpler approach to automating squiggles using a record and playback method.

Prerequisites?

You will benefit from some basic insights in maths, including vectors and trigonometry (or perhaps you can pick some of it up as you read along). If you want to run the supercollider examples you will also need some basic familiarity with supercollider. The things I will discuss have many applications outside the realm of sound synthesis and algorithmic composition (like in graphical design), so you can also read the blog post to get some insights for usage in other domains.

Ok then... let's go

Here we will discuss parametric equations with a single parameter ("time") that are used to describe 2-dimensional curves. The power of parametric equations comes from the fact that they can specify a motion in x (left-right) and y (up-down) separately, which gives it powers beyond those of simple mathematical functions of the form y = f(x). In parametric equations you have two equations, one for x and one for y. Both are described in terms of the parameter t. You can think of x(t) as the value of x for time t and similarly y(t) describes the value of y for time t. By plotting (x(t),y(t)) for each value of parameter t in a 2d space, we get a curve that represents the squiggle.

In mathematics, the parameter t in the parametric equations is usually rescaled in such a way that the curve is completely described as t varies from 0 to 1. We will come back to this later, when we discuss why this is not necessarily the best choice for usage in a supercollider application.

Building intuitions with points

Suppose we write the following equations:

( x(t), y(t) ) = (0, 0)

These say that no matter what the value of t is, the outcome is always (0,0). If we plot (0, 0) in a 2d space, we get a single point.

Suppose we write:

( x(t), y(t) ) = ( 10, -20 )

Then the point moves to location (10, -20). So far, no surprises. Adding a positive constant to the x component moves the scribble (a point here, really) to the right, adding a negative constant to the x component moves the scribble to the left, and adding positive or negative constants to the y component moves the point respectively up or down.


Building intuitions about lines

Points are boring, right?... let's vary the x position as t progresses. A simple example would be:

( x(t), y(t) ) = ( t, 0.6) for ( 0 <= t <= 1)

As time t increases, so does x (since x = t). Parameter y on the other hand remains at 0.6. As a result we expect a horizontal line to appear at height 0.6. This is shown below for 0 <= t <= 1. (If you are unsure how this line results from the above, try putting a few values of t between 0 and 1 in the equations, and plot the results manually on graph paper.)

Suppose we want to shift this line to the left? Just add a negative constant to x (same as with the point!). Suppose we want to shift this line up? Just add a positive constant to y (same as with the point!). In fact you can always add constants to x and y to shift the curves to wherever you want.

Suppose we want to extend the line (make it longer)? We have a few options. We can either:

- Change the domain of t (the valid values of t), e.g. choose 0 <= t <= 2 instead of 0 <= t <= 1 as before.

- Or we can rescale time, that is, we can e.g. replace all occurrences of t in the equation with a new parameter q = t/2 (which implies t = 2*q), so we get ( x(q), y(q) ) = ( 2q, 0.6). If we now still use the same bounds for q as we did for t, i.e. 0 <= q <= 1, we've effectively made time run twice as fast, so the line will have doubled in length. If you wanted to keep the original line length after the replacement with the new parameter, you would also have to update the domain of q as follows: 0 <= t <= 1, so 0 <= 2q <= 1, so 0 <= q <= 0.5.

- Or we can stretch the x component by multiplying it with a constant, e.g. ( x(t), y(t) ) = ( 2t, 0.6) for 0 <= t <= 1. You can always stretch the x or y component by multiplying it with a constant.

Mathematically, all three options for changing the length of a curve yield the same results, but when we realize the line in supercollider, the chosen option will make a difference. If you rescale time, it will also impact the speed with which the squiggle is realized (drawn). This means that rescaling time while also updating the bounds is a way to speed up or slow down the generated squiggles without affecting the curve.
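The options above can be checked numerically. The sketch below is in plain Python rather than supercollider, purely to verify the arithmetic; the function names `line` and `line_rescaled` are made up for this illustration.

```python
def line(t):
    """The horizontal line (x(t), y(t)) = (t, 0.6)."""
    return (t, 0.6)

def line_rescaled(q):
    """The same line after substituting t = 2*q, i.e. (2q, 0.6)."""
    return line(2 * q)

# Widen the domain to 0 <= t <= 2.
end_wide_domain = line(2.0)
# Keep 0 <= q <= 1 but let time run twice as fast.
end_rescaled = line_rescaled(1.0)
# Rescale time AND shrink the domain to 0 <= q <= 0.5:
# the original length, traversed in half the parameter range.
end_fast = line_rescaled(0.5)

print(end_wide_domain, end_rescaled, end_fast)
```

The first two end points coincide (the line doubled in length), while the third is the end point of the original line.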

Here's another neat trick: if we replace all occurrences of t in the equations with (t_end - t) we can make time run backwards, i.e. the squiggle is then generated backwards, or in this case from right to left. The symbol t_end here means the last value t can get, e.g. if we chose the domain of t as 0 <= t <= 2, then t_end would be 2. Note that in supercollider it will be easy to reverse time directly without having to resort to these mathematical tricks to make it look as if time flows backwards even if in reality it flows forwards.
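As a small Python sketch of this substitution (the helper name `reverse_time` is hypothetical, not something from supercollider), time reversal can be written as a wrapper around any curve:

```python
def reverse_time(curve, t_end):
    """Return a curve that traces `curve` backwards over 0 <= t <= t_end."""
    return lambda t: curve(t_end - t)

def line(t):
    """The horizontal line (x(t), y(t)) = (t, 0.6)."""
    return (t, 0.6)

# With the domain 0 <= t <= 2, the reversed line starts on the right.
backwards = reverse_time(line, 2.0)
print(backwards(0.0), backwards(2.0))
```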

Horizontal lines are just as boring as points. Can we do tilted lines? Well yes, of course. The key to tilted lines is to realize that in order to draw a tilted line you need to vary both x and y as time progresses. Let's try this with a few equations:

( x(t), y(t) ) = ( t, t) for (0 <= t <= 1). Here x and y vary equally as t progresses. We get a line under 45 degrees:

( x(t), y(t) ) = (t, 2t) for (0 <= t <= 1). Here we scaled y with a factor two. Therefore we expect the height to rise twice as fast as the width:

( x(t), y(t) ) = ( -t, -t/2 ). Here we scaled x negatively, and scaled y down by a factor 2.

Whenever you multiply the complete x component with -1, the complete graph is mirrored around the y-axis (left and right are swapped). If you multiply the y component with -1 the graph is mirrored around the x-axis (up and down are swapped). We also divided the y component by two, so it should vary slower in height than x varies in width as t progresses.

Towards more interesting squiggles

As far as squiggles go, lines are boring too. We need to think about how we scribble on a piece of paper. Here's one possible recipe: we move our arm quickly up and down as we move it slowly from left to right. Moving slowly from left to right is easy, that's the line we've been creating in the previous paragraph: x(t) = t. Do we know functions that move up and down all the time? Sure we do! They are called oscillators, and they come in all sizes and flavors: triangular waves, sawtooth waves or simply sine waves. As sine waves are the easiest ones to describe mathematically, let's concentrate on those first. (In supercollider it will be easy to replace one with the other). Let's e.g. take

( x(t), y(t) ) = ( t, sin(2*(2*pi*t)) ) for ( 0 <= t <= 4 )

If you count well, you will spot 2*4 = 8 periods of the squiggle. This is no coincidence: the factor 2 in front of (2*pi*t) gives 2 periods per unit of t, and t runs over 4 units. Changing either number is how you can vary the density of the squiggle. Now this squiggle is horizontal, but we can apply the intuitions we've built up before to stretch it in all directions, to tilt it or to time-reverse it. E.g. let's tilt it by letting y rise as t rises. This is just a matter of adding the tilt to y(t). This is the neat thing about parametric equations: you can superpose effects.

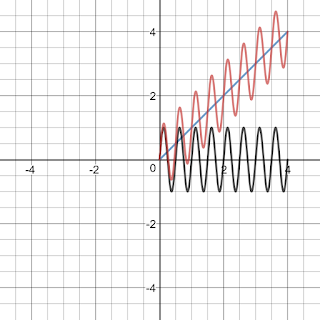

( x(t), y(t) ) = ( t, t + sin(2*(2*pi*t)) ) for ( 0 <= t <= 4 )

In blue you see the line ( x(t), y(t) ) = (t, t). In black you see the squiggle (x(t),y(t)) = (t, sin(2*(2*pi*t))). In red you see both combined: (x(t),y(t)) = (t, t + sin(2*(2*pi*t))). Note that in the combined version, x should not move faster than in the separate versions so for this reason the x component is not changed.

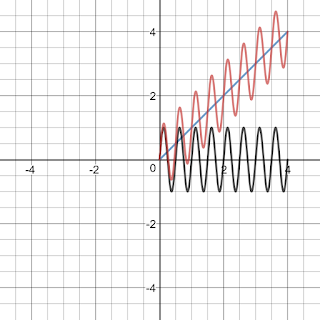
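To double-check both the period count and the superposition, here is a minimal Python sketch. The function names and the crossing-counting approach are assumptions of this illustration, not part of the original examples.

```python
import math

def tilt(t):
    """The blue line (t, t)."""
    return (t, t)

def squiggle(t):
    """The black squiggle (t, sin(2*(2*pi*t)))."""
    return (t, math.sin(2 * (2 * math.pi * t)))

def tilted_squiggle(t):
    """The red curve: x is kept as t, only the y parts are added."""
    return (t, tilt(t)[1] + squiggle(t)[1])

def count_periods(t_max, steps=4000):
    """Count positive-to-negative crossings of the squiggle's y over 0..t_max."""
    # Sample at interval midpoints so no sample lands exactly on a zero.
    ys = [squiggle((k + 0.5) * t_max / steps)[1] for k in range(steps)]
    return sum(1 for a, b in zip(ys, ys[1:]) if a > 0 and b < 0)

print(count_periods(4))
print(tilted_squiggle(0.125))
```

Each period contains exactly one positive-to-negative crossing, so the count equals the number of periods on the interval.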

What would the tilted squiggle look like if we oscillated in x direction instead of the y direction? Let's try to find out: (x(t), y(t)) = (t + sin(2*(2*pi*t)), t).

In blue you see the line ( x(t), y(t) ) = (t, t). In black you see the squiggle (x(t),y(t)) = (sin(2*(2*pi*t)), t). In red you see the combination (x(t),y(t)) = (t + sin(2*(2*pi*t)), t).

What happens if we squiggle in both x and y direction simultaneously?

(x(t),y(t)) = (t + sin(4*pi*t), t + sin(4*pi*t) ).

Ahm... what? That is a disappointment. Surely that can't be right? Right!? But actually this is correct. If you squiggle left and right in perfect sync with squiggling up and down you get a straight line. Things change quite drastically however, if you don't do it in perfect sync. Let's try some examples where we squiggle up and down with the same speed, but different phase:

(x(t),y(t)) = (t + sin(4*pi*t), t + sin(4*pi*t + pi) )

(x(t),y(t)) = (t + sin(4*pi*t), t + sin(4*pi*t + pi/2) )

(x(t),y(t)) = (t + sin(4*pi*t), t + sin(4*pi*t + pi/4) )
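The effect of the phase can also be seen numerically. The Python sketch below (with a made-up helper `max_gap`) measures how far the curve ever strays from the diagonal x = y: with zero phase it never leaves it, with a phase of pi it swings the furthest.

```python
import math

def max_gap(phase, t_max=4.0, steps=1600):
    """Largest |x - y| along (t + sin(4*pi*t), t + sin(4*pi*t + phase))."""
    gap = 0.0
    for k in range(steps + 1):
        t = k * t_max / steps
        x = t + math.sin(4 * math.pi * t)
        y = t + math.sin(4 * math.pi * t + phase)
        gap = max(gap, abs(x - y))
    return gap

in_sync = max_gap(0.0)        # squiggling in perfect sync
opposite = max_gap(math.pi)   # squiggling in opposite directions
print(in_sync, opposite)
```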

What if we squiggled in x and y direction with different frequencies instead? By playing with frequency and phase you can get some really intricate results, e.g.

(x(t),y(t)) = (t + sin(2pi*t - (2*pi/10)), t + sin(3pi*t + pi/3) )

Tapering

With the parametric equations we have a lot of power at our hands to further transform the generated curves. Suppose we want the squiggle to widen as time progresses. We already knew we can widen the squiggle by stretching it (multiplying it) with a constant. But now we want the widening to increase as time progresses, so instead of multiplying it with a constant, we want to multiply it with something that increases as time increases. Can you think of such a thing? The answer is quite obvious once you know it: we can multiply it with time t itself! (Or some scaled and shifted version of it). Let's try out the idea on our tilted squiggle. We'll add tapering by multiplying the squiggly parts (the sines) with t/4:

(x(t),y(t)) = (t + t/4*sin(4*pi*t), t + t/4*sin(4*pi*t + pi) )

If instead of linear tapering you wanted quadratic tapering, you would multiply with a quadratic tapering function (here I've increased the domain of t to 0 <= t <= 8 to better show the quadratic shape):

(x(t), y(t)) = (t + t^2/16*sin(4*pi*t), t + t^2/16*sin(4*pi*t + pi))
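A minimal Python sketch of both tapering variants, assuming a single hypothetical `power` argument to switch between linear (t/4) and quadratic (t^2/16) tapering. The helper `widest_offset` is also made up; it simply reports how far x strays from the untapered centre value t.

```python
import math

def tapered(t, power=1):
    """Tilted squiggle whose width grows like (t/4)**power."""
    envelope = (t / 4) ** power
    x = t + envelope * math.sin(4 * math.pi * t)
    y = t + envelope * math.sin(4 * math.pi * t + math.pi)
    return (x, y)

def widest_offset(power, t_lo, t_hi, steps=400):
    """Largest |x - t| seen for t_lo <= t <= t_hi."""
    ts = [t_lo + k * (t_hi - t_lo) / steps for k in range(steps + 1)]
    return max(abs(tapered(t, power)[0] - t) for t in ts)

# The squiggle is narrow early on and wide later.
print(widest_offset(1, 0, 1), widest_offset(1, 3, 4))
```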

Bending squiggles

There's really no limit to what you can do. Suppose I wanted to take the linearly tapered squiggle and bend it around a circle with radius 10. Remember that we tilted the squiggled line by adding a horizontal version to a tilted line. Perhaps we can do the same with a circle? The parametric equation for a circle with radius 10 is (x(t),y(t)) = ( 10*sin(t), 10*cos(t) ) for 0 <= t <= 2pi. As before we can reparametrize the equation to make the bounds compatible with the tapered squiggle. We want to vary t from 0 to 4 instead of from 0 to 2pi. This means that we introduce a new parameter q = (4*t/(2pi)), which implies t = (2pi)*q/4. Then indeed 0 <= t <= 2pi, so 0 <= q*(2pi)/4 <= 2pi, so 0 <= q <= 4.

The circle equation therefore becomes (x(q), y(q)) = (10*sin(2pi*q/4), 10*cos(2pi*q/4)). And we can now just replace symbol q with symbol t again (technically this is a different t than before, one that now has compatible bounds) to get (x(t), y(t) ) = (10*sin(2pi*t/4), 10*cos(2pi*t/4)).
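The reparametrization can be verified with a few sample points. This is a Python sketch with made-up function names; it only checks that the retimed circle completes exactly one turn as the parameter runs from 0 to 4.

```python
import math

def circle(t):
    """Circle of radius 10, complete for 0 <= t <= 2*pi."""
    return (10 * math.sin(t), 10 * math.cos(t))

def circle_retimed(q):
    """Same circle, complete for 0 <= q <= 4 (substitute t = 2*pi*q/4)."""
    return circle(2 * math.pi * q / 4)

start = circle_retimed(0.0)     # top of the circle
quarter = circle_retimed(1.0)   # a quarter turn later
end = circle_retimed(4.0)       # back at the start, up to rounding
print(start, quarter, end)
```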

Let's see what happens if we add the tapered squiggle to this circle instead (I've increased the number of squiggles to make the effect more obvious):

(x(t),y(t)) = (10*sin(2*pi*t/4) + t/4*sin(16*pi*t), 10*cos(2*pi*t/4) + t/4*sin(16*pi*t + pi) )


Can you think of a way to make a squiggly spiral instead of a squiggly circle now (hint: something with the radius of the circle being time dependent)? Or how would you bend it over a quarter circle instead of a complete circle (hint: something with time rescaling of the circle equation)?

Polar equations

You may think by now that you are an absolute master in everything related to parametric equations, but nothing could be further from the truth. Many types of squiggles exist that we cannot easily construct with the insights presented before. One such type of squiggle is that which involves intricate circular movements (think mandalas). And for those types of squiggles, it's usually easier to think in terms of so-called polar equations. Polar equations can easily be translated to parametric equations so the insights from the previous paragraphs are not lost.

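So how are polar equations described? They are described as a variation of the radius as a function of the angle: radius = r(phi). Such an equation can be translated to a parametric equation as follows: (x, y) = (radius*cos(phi), radius*sin(phi)). Swapping sin and cos, as the examples in this section do, measures the angle from the y-axis instead of the x-axis; it mirrors the curve around the line y = x but leaves its shape intact.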

Suppose you have a constant radius 1 for all angles phi. What curve do you get? A circle.

r = 1

To convert r = 1 in polar form into parametric form, we can use the formulas given above:

x = r*sin(phi) = sin(phi)

y = r*cos(phi) = cos(phi)

Since phi is just a parameter that varies e.g. from 0 to 2pi, we can rename it to t, also varying from 0 to 2pi (or we can perform a substitution phi = 2pi*t, so that the range of t now varies between 0 and 1 instead of 0 and 2pi).

How can we make this a squiggly circle? By varying the radius as phi changes. We want the radius to oscillate as phi changes: we can again use sine, triangular or sawtooth waves, e.g.

r = sin(6*phi) + 2

In parametric equations, this would be:

x = r*sin(phi) = (sin(6*phi) + 2)*sin(phi)

y = r*cos(phi) = (sin(6*phi) + 2)*cos(phi)
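The conversion is mechanical enough to capture in one helper. The Python sketch below uses the same x = r*sin(phi), y = r*cos(phi) form as the equations just above; `polar_to_xy` and `flower` are hypothetical names for this illustration.

```python
import math

def polar_to_xy(radius, phi):
    """Turn a polar equation r = radius(phi) into a point (x, y)."""
    r = radius(phi)
    return (r * math.sin(phi), r * math.cos(phi))

def flower(phi):
    """The squiggly circle r = sin(6*phi) + 2."""
    return math.sin(6 * phi) + 2

# Sample one full turn in steps of one degree.
points = [polar_to_xy(flower, 2 * math.pi * k / 360) for k in range(361)]
radii = [math.hypot(x, y) for x, y in points]
print(min(radii), max(radii))
```

The distance to the origin oscillates between 1 and 3, which is the range of sin(6*phi) + 2. Those bounds are what you need later when scaling a squiggle to a parameter range.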

Or a spiral with r(phi) = 0.1*phi/(2*pi), which in parametric equations becomes

(x(phi), y(phi) ) = (r*sin(phi), r*cos(phi)) = (0.1*phi/(2*pi)*sin(phi), 0.1*phi/(2*pi)*cos(phi))

Or maybe you want to scribble something more chaotic like r = cos(0.95*phi/8) (for 0 <= phi <= 40*pi):

Or something more aesthetic like this rose? r = phi + 2*sin(2*pi*phi) + 4*cos(2*pi*phi)

Bezier curves

(image courtesy of https://plus.maths.org/content/bridges-string-art-and-bezier-curves)

Nothing special here. With everything you know already some equations should suffice.

- Bezier curve segment specified with 2 control points (i.e. a line between known points (x1,y1) and (x2, y2)): (x(t), y(t)) = (x1 + t(x2 - x1), y1 + t(y2 - y1)) for 0 <= t <= 1

- Bezier curve segment specified with 3 control points (x1, y1), (x2, y2) and (x3, y3): (x(t), y(t)) = ((1-t)^2*x1 + 2(1-t)t*x2 + t^2*x3, (1-t)^2*y1 + 2(1-t)t*y2 + t^2*y3) for 0 <= t <= 1

- You can concatenate several 3-control Bezier curve segments to form larger curves. You can also create higher order Bezier curve segments using more than 3 control points. The formulas can be found on wikipedia.
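For reference, here is a short Python sketch of the 2-point and 3-point segments in their standard form. The function names are made up; points are plain (x, y) tuples and t runs from 0 to 1.

```python
def bezier_line(p1, p2, t):
    """Segment from p1 (t = 0) to p2 (t = 1)."""
    return tuple(a + t * (b - a) for a, b in zip(p1, p2))

def bezier_quadratic(p1, p2, p3, t):
    """Quadratic segment: starts at p1, ends at p3, pulled towards p2."""
    u = 1 - t
    return tuple(u * u * a + 2 * u * t * b + t * t * c
                 for a, b, c in zip(p1, p2, p3))

# Halfway along an arch from (0, 0) to (2, 0) with control point (1, 2).
print(bezier_quadratic((0, 0), (1, 2), (2, 0), 0.5))
```

Note that the curve starts and ends on the outer control points but only bends towards the middle one without touching it.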

Concatenation and periodization of parametric curves

In some environments (like in supercollider Synths) it is difficult to perform if-then-else. The lack of if-then-else makes it hard to concatenate parametric equation segments. Supercollider luckily offers the Select UGen which allows one to fake if-then-else by evaluating all branches and selecting results from only one of them.

As an alternative, one can also concatenate the curve segments mathematically. This is explained in the paper "Single equation without inequalities to represent a composite curve" by "E. Chicurel-Uziel", available from Elsevier's Computer Aided Geometric Design (worth reading!).

I won't repeat the complete paper here, but the techniques in the paper are directly applicable to our use cases. The basic idea is to define a step-function that can be used as a "switch" to switch on or off a certain parametric equation in a given interval. This can be combined with retiming of the parametric curve (i.e. substitute all "t" with "t-a" to delay the curve by a time units) to concatenate different curves into one bigger curve. The paper also describes a way to make non-periodic function segments periodic, both for cartesian and polar coordinates.
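A rough sketch of the switch idea in Python. It uses a hard 0/1 step built from a comparison, which is an assumption of this sketch and not necessarily the step function the paper defines; the two line segments and all function names are made up for illustration.

```python
def step(t, a):
    """1.0 once t has reached a, else 0.0 (no if-then-else needed)."""
    return float(t >= a)

def window(t, a, b):
    """1.0 on a <= t < b and 0.0 elsewhere."""
    return step(t, a) - step(t, b)

def segment_one(t):
    """Horizontal line from (0, 0) to (1, 0) for 0 <= t < 1."""
    return (t, 0.0)

def segment_two(t):
    """Vertical line from (1, 0) to (1, 1) for 0 <= t < 1."""
    return (1.0, t)

def composite(t):
    """Both segments are always evaluated; the windows pick the active one."""
    x1, y1 = segment_one(t)
    x2, y2 = segment_two(t - 1.0)  # retimed: delayed by one time unit
    w1 = window(t, 0.0, 1.0)
    w2 = window(t, 1.0, 2.0)
    return (w1 * x1 + w2 * x2, w1 * y1 + w2 * y2)

print(composite(0.5), composite(1.5))
```

For 0 <= t < 2 this traces the horizontal segment first and the vertical segment second, as a single expression per coordinate.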

Full explanation falls outside the scope of this blog post (maybe in a later one?), but if you understood everything so far the article itself is not a lot more difficult.

As a supercollider-only alternative, one may also sequence the different curve segments using patterns.

Conclusion

Note that so far we've barely scratched the possibilities. It's not so difficult to imagine how tons of stuff is possible by cleverly combining techniques and by introducing other functions besides polynomials and sines.

Feel free to post your best squiggles in the comments section!

With some techniques under our belt, let's now tackle the implementation in supercollider. As stated before, the aim is to replace MouseX and MouseY with pre-programmed squiggles.

Implementation in supercollider

In the previous sections we've always used an abstract parameter t (or "time") and this parameter t always varied from some lower bound (usually 0) to some upper bound (often 1 or 2pi). Where will this parameter come from in supercollider?

The simplest way of creating a parameter that goes from some lower bound to an upper bound is to use a sawtooth wave. If we use only one period of the sawtooth wave, we generate the complete squiggle exactly once. But if we use multiple periods, the same squiggle is generated multiple times as well.

If instead of a sawtooth wave we use a triangular wave, then we always generate the squiggle from beginning to end, followed by the same squiggle in reverse (time counts down again as the triangle goes down again).
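To make the two time sources concrete, here is a Python sketch of a sawtooth and a triangle that produce the parameter t from wall-clock time. The names `saw` and `tri` and their arguments are assumptions of this illustration, not supercollider UGens.

```python
def saw(time, period, t_max):
    """Ramp from 0 up to t_max once per period, then jump back to 0."""
    return t_max * ((time / period) % 1.0)

def tri(time, period, t_max):
    """Rise from 0 to t_max in the first half period, fall back in the second."""
    phase = (time / period) % 1.0
    return t_max * (1.0 - abs(2.0 * phase - 1.0))

# A squiggle defined on 0 <= t <= 4, replayed once per second.
print(saw(0.25, 1.0, 4.0), saw(1.25, 1.0, 4.0))   # same t in every period
print(tri(0.25, 1.0, 4.0), tri(0.75, 1.0, 4.0))   # same t going up and coming down
```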

Let's start by taking a synthdef that sounds somewhat interesting with a MouseX and a MouseY.

Run it in supercollider and notice how squiggling with the mouse creates some interesting effects.

Now choose a squiggle. I chose r = sin(5*phi) + 2 in polar coordinates (very similar to the 2nd figure in the section on polar coordinates, only 5 lobes instead of 6). Here's the supercollider implementation. It also displays a scope which, if you put it in X/Y mode will show you the squiggle as it is generated and executed.

By changing "speed" you can traverse the 2d space faster/more slowly, and by changing "rotations" you can traverse the 2d space partially/multiple times (i.e. multiple complete rotations). The "rotations" parameter has no effect on perfectly cyclic squiggles like the flower shape, but it would have an important effect on non-cyclic squiggles, like a spiral.

The only thing that needs to be changed to switch to a different squiggle in polar coordinates is the line that defines "commonpart" and the min and max x and y values of the generated squiggle (and of course speed and rotations can be set to personal taste).
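Why the min and max values matter: the squiggle's x and y have to be rescaled to whatever range the replaced MouseX and MouseY covered. The Python sketch below shows that rescaling for the r = sin(5*phi) + 2 flower. The target ranges (200 to 2000 and 0 to 1), the helper names, and the way `rotations` multiplies the angle are all assumptions for illustration, not the original SynthDef.

```python
import math

def rescale(value, in_lo, in_hi, out_lo, out_hi):
    """Map value linearly from [in_lo, in_hi] to [out_lo, out_hi]."""
    return out_lo + (value - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)

def flower_xy(phi):
    """r = sin(5*phi) + 2, so x and y both stay within -3..3."""
    r = math.sin(5 * phi) + 2
    return (r * math.sin(phi), r * math.cos(phi))

def squiggle_controls(phase, rotations=1.0):
    """phase runs 0..1 (e.g. one sawtooth period); returns two control values."""
    x, y = flower_xy(2 * math.pi * rotations * phase)
    freq = rescale(x, -3, 3, 200, 2000)   # hypothetical frequency range
    amount = rescale(y, -3, 3, 0, 1)      # hypothetical 0..1 control range
    return (freq, amount)

print(squiggle_controls(0.0))
```

If the min and max passed to `rescale` are too tight, the controls overshoot their intended range; if they are too loose, part of the range is never reached.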

Applying the theory from this post yields us:

However, the following - technically flawed - version actually sounds better... so I will keep it here as well. The mistake, in case you are wondering, lies in confusing the sin operation with the SinOsc oscillator.

The example below can be downloaded from http://sccode.org/1-57x

And here's another example with the quadratically tapered squiggle (x(t), y(t)) = (t + t^2/16*sin(4*pi*t), t + t^2/16*sin(4*pi*t + pi)):

And of course, nothing stops you from also animating other parameters. There's literally no reason why e.g. you couldn't turn parameter speed into a squiggle as well.

Finally here's a longer example that combines multiple squiggles together and also visualizes them while they are being generated. While implementing this example, it became clear that there are many (MANY!) possible pitfalls you can fall into. But hey... at least the maths never lies.

First the Desmos design:

Next the source code (get it from http://sccode.org/1-57z):

And finally a screenshot of the thing running:

Have fun!